NELS

A Never-Ending Learner of Sounds to hear and understand the Web.

Hearing machines that understand sounds as humans do require computational programs that can learn from years of accumulated, diverse acoustics. Such programs must use the knowledge they have accumulated to guide subsequent learning and to organize what they hear. They must learn names for recognizable events, scenes, objects, actions, materials, and places, and they must retrieve sounds by reference to those names. They must also continuously improve their hearing competence to encompass the full diversity and scale of the world's acoustics. Examples of applications are described in my blog post “Everyday, sounds occur around us.” These ultimate goals of hearing machines inspired my PhD project, the Never-Ending Learner of Sounds (NELS) [1,2], which began in 2016.

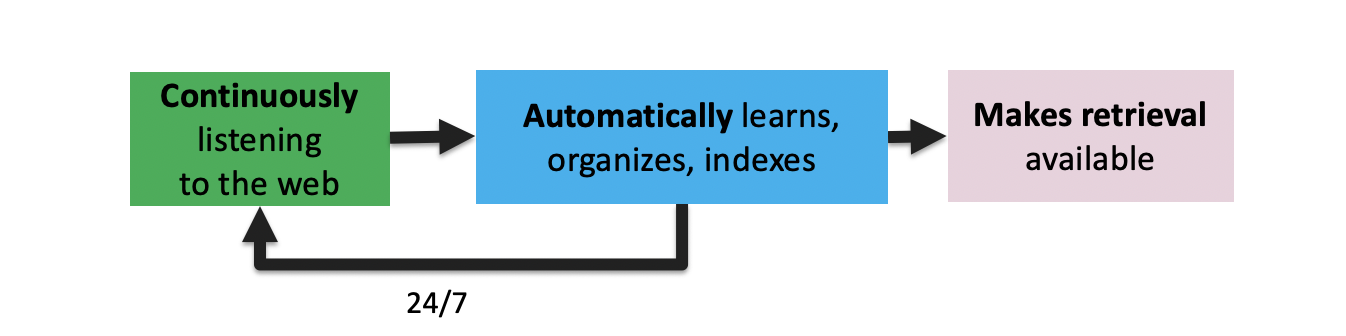

NELS aims to continuously hear and understand the Web, automatically learn new knowledge about sounds, organize and index audio content, and make that content available for people to query and retrieve all kinds of information. Doing this raises many challenges. The Web contains an unbounded number of sounds. Training and evaluating Machine Learning models must deal with recordings that are noisy, unstructured, and unlabeled. We must define what knowledge to learn about sounds. And we must understand how people search for sounds, because describing acoustic phenomena in words can be hard. For example, the sound of water is one of the most distinguishable sounds, but how would you describe it without using the word “water”?

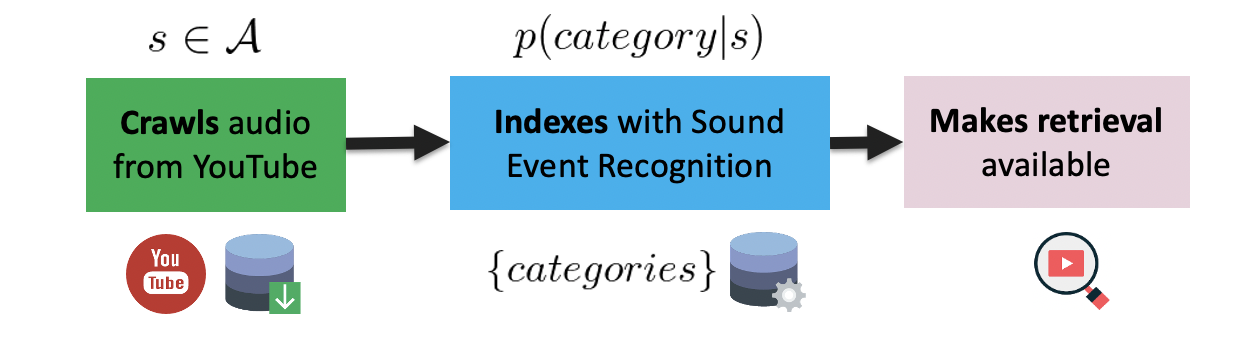

Broadly speaking, NELS crawls audio from YouTube and indexes it with Sound Event Recognition (SER). I chose YouTube because it is arguably the largest archive of sounds, and its content reflects the diversity of acoustics in the world. The downloaded audio is split into segments, and each segment is passed to an SER system that, depending on its posterior probabilities over the pre-defined categories, either discards the segment or indexes it with one of those categories. The indexed segments are made available for search and retrieval through the website nels.cs.cmu.edu.
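The segment-classify-index step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the actual NELS implementation: the category list, the fixed-length non-overlapping segmentation, the 0.5 threshold on the highest posterior, and the `classify` callable (a stand-in for a trained SER model) are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical label set; the real NELS categories are not listed in this post.
CATEGORIES = ["water", "dog_bark", "siren", "speech"]


def segment(samples: Sequence[float], sample_rate: int,
            seconds: float) -> List[Tuple[float, Sequence[float]]]:
    """Split a waveform into non-overlapping fixed-length segments.

    Returns (start_time_in_seconds, samples) pairs; a trailing partial
    segment shorter than `seconds` is dropped (an assumed design choice).
    """
    size = int(sample_rate * seconds)
    return [
        (start / sample_rate, samples[start:start + size])
        for start in range(0, len(samples) - size + 1, size)
    ]


def index_segments(
    video_id: str,
    samples: Sequence[float],
    sample_rate: int,
    classify: Callable[[Sequence[float]], Sequence[float]],
    threshold: float = 0.5,  # hypothetical confidence threshold
    seconds: float = 1.0,    # hypothetical segment length
) -> Dict[str, List[Tuple[str, float, float]]]:
    """Map each category to (video_id, start_time, posterior) entries."""
    index = defaultdict(list)
    for start, seg in segment(samples, sample_rate, seconds):
        posteriors = classify(seg)  # one probability per entry in CATEGORIES
        best = max(range(len(posteriors)), key=lambda i: posteriors[i])
        if posteriors[best] < threshold:
            continue  # not confident enough: discard the segment
        index[CATEGORIES[best]].append((video_id, start, posteriors[best]))
    return dict(index)


def toy_classifier(seg: Sequence[float]) -> List[float]:
    """Stand-in for a trained SER model: loud segments look like a siren."""
    energy = sum(x * x for x in seg) / len(seg)
    if energy > 0.5:
        return [0.05, 0.05, 0.85, 0.05]
    return [0.25, 0.25, 0.25, 0.25]  # uncertain: uniform posteriors


# Toy input: 8 samples at a (toy) 4 Hz sample rate -> two 1-second segments.
audio = [0.0] * 4 + [1.0] * 4
print(index_segments("abc123", audio, sample_rate=4, classify=toy_classifier))
# → {'siren': [('abc123', 1.0, 0.85)]}
```

Thresholding the top posterior trades recall for precision: a higher threshold keeps fewer but more reliable segments in the index, which matters when the crawled audio is noisy and unlabeled.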

In upcoming project posts, I will present research on the different modules that advance the state of NELS. NELS is inspired by, and aims to emulate, two similar and more mature projects at Carnegie Mellon University: the Never-Ending Language Learner, led by Tom Mitchell [3], and the Never-Ending Image Learner, led by Abhinav Gupta [4]. Since its inception in 2016, NELS research has been published in top venues, has motivated multiple international collaborations, and has received multiple honors.

References

- [1] Elizalde, Benjamin Martinez. “Never-Ending Learning of Sounds.” PhD diss., Carnegie Mellon University, 2020.

- [2] Elizalde, Benjamin, Rohan Badlani, Ankit Shah, Anurag Kumar, and Bhiksha Raj. “NELS - Never-Ending Learner of Sounds.” NIPS Workshop on Machine Learning for Audio, 2017.

- [3] Mitchell, Tom, William Cohen, Estevam Hruschka, Partha Talukdar, Bishan Yang, Justin Betteridge, Andrew Carlson, et al. “Never-Ending Learning.” Communications of the ACM 61, no. 5 (2018): 103-115.

- [4] Chen, Xinlei, Abhinav Shrivastava, and Abhinav Gupta. “NEIL: Extracting Visual Knowledge from Web Data.” Proceedings of the IEEE International Conference on Computer Vision, 2013.

Honors

- Won the 2017 Gandhian Young Technological Innovation Award (one of 39 winners out of 2,915 entries).

- Finalist in the 2018 Qualcomm Innovation Fellowship competition (one of 30 finalists out of 174 entries).

- Third place in the 2018 IEEE DCASE Making Sense of Sounds Data Challenge (out of 12 teams).

- Received financial support from Bosch Research in Pittsburgh in 2019.

- Received financial support from the Sense of Wonder Group and CONACyT.

Date: Published in January 2021, based on work done during my PhD between 2015 and 2020.