Every day, sounds occur around us.

Everyday situations are rarely silent. Sounds occur around us most of the time, and we can interpret many of them and react accordingly.

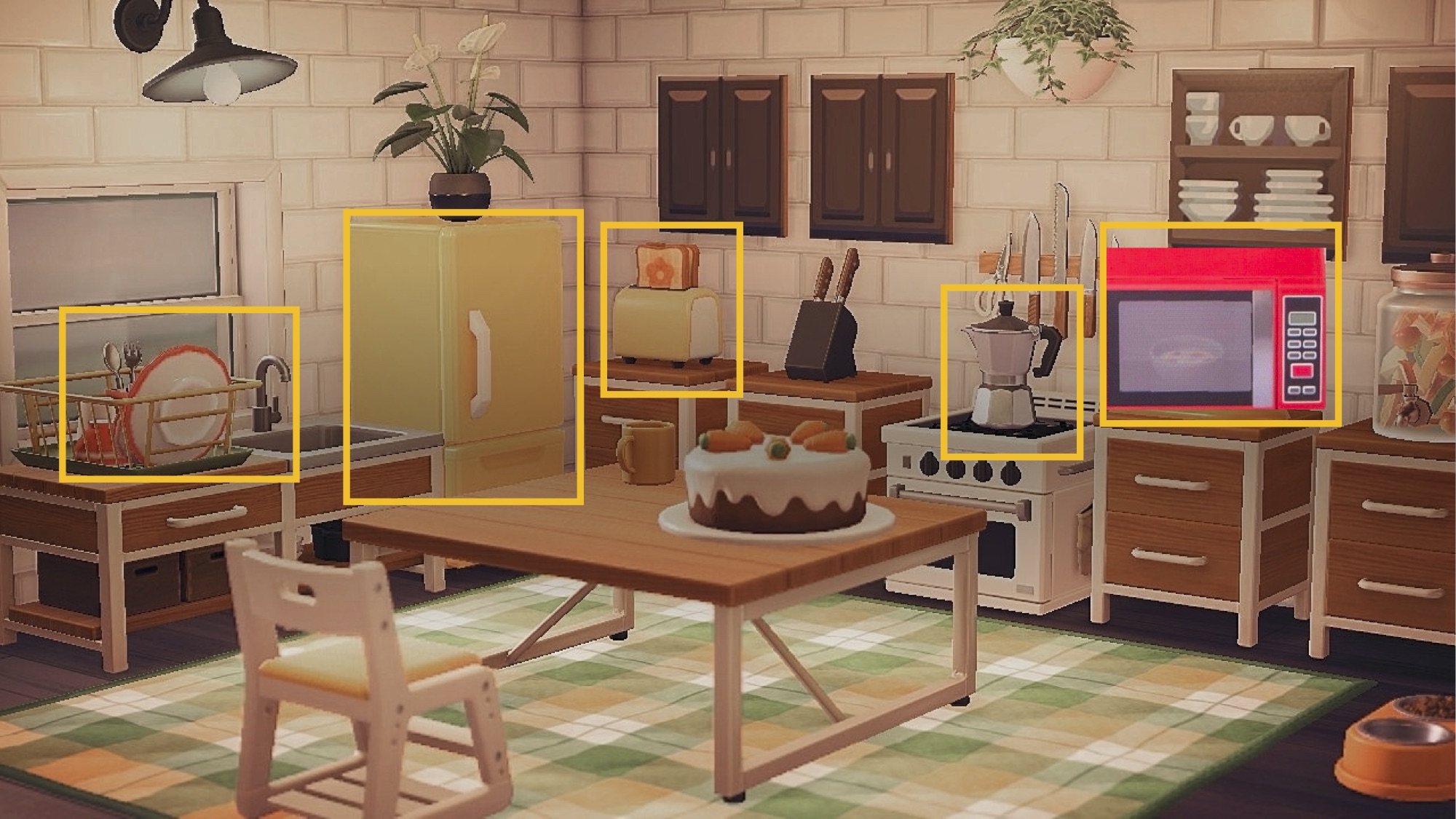

Think about how much information can be inferred from the acoustics of the kitchen depicted in the figure. Some sound events are produced in order to convey specific messages: an espresso pot whistles to indicate that the water is hot, and the microwave's bell announces that the food is ready. Other sounds occur without the intention of conveying a message but are meaningful in context: the refrigerator's steady buzzing indicates that it is on and working normally, a toaster ejecting bread indicates that the bread is toasted, and the scrubbing of ceramic plates with water flowing on and off indicates that someone is washing the dishes. This complex array of sound events represents an acoustic scene, which conveys essential information in people's lives.

Acoustic Intelligence (also called Sound Understanding or Audio Analytics) is a field that aims to build systems that automatically recognize sounds and extract meaning that helps us react accordingly. This emerging field is part of Machine Hearing and involves sound-related tasks that resemble human hearing, like speech and music processing, as well as tasks that have nothing to do with human hearing, like sonography, seismic analysis, and sonar [1]. Acoustically intelligent systems are based on Artificial Intelligence and rely on Machine Learning and Audio Signal Processing. Such systems are already used in multiple applications:

• In Healthcare, Sound Intelligence has developed acoustic monitoring systems for hospital rooms that identify sounds signaling a patient emergency, such as a person moaning, and alert the caregiver.

• In 2020, the Apple Watch began using its motion sensors and microphones to detect hand-washing movements and water-flowing sounds. If the user stops washing their hands too early, the watch prompts them to keep washing [2].

• For Smart Homes, Audio Analytics employs acoustic sensors to detect domestic and safety-related sounds, such as a dog barking, glass breaking, and a baby crying.

• Digital assistants identify human-produced sounds to enhance our interaction with them. For example, Amazon Alexa recognizes spoken language and will soon recognize laughter too. We could take laughter recognition one step further: if Alexa tells us a joke and recognizes our different types of laughter, it could adapt its jokes to our sense of humor.

• In Automotive, Waymo's self-driving cars identify sounds to respond safely to emergency vehicles and traffic warnings [3]. For example, the wailing siren of an emergency vehicle can be heard miles away. The car reacts depending on where the sound is coming from: if it is coming from behind, the car has to pull over, but if it is coming from ahead, the car has to stop.

• For Security and Surveillance in the urban context, sounds are identified to detect safety-related events, like glass breaking or gunshots. New York University and local authorities are doing this as part of the SONYC project, which uses acoustic sensors located across New York City's downtown.

• Acoustic monitoring of machinery identifies sounds to determine whether machines need to be repaired or replaced. For example, in 2020 Bosch Research presented a device called SoundSee, which monitors machines on the International Space Station.

• Every day, people record their lives and share them on social media. In fact, video accounts for over 82% of consumer Internet traffic, according to Cisco [4]. The soundtrack of these videos contains all kinds of sounds that can be used to index and organize content. In 2020, Microsoft began offering recognition of audience reaction sounds (e.g. laughing, clapping, booing) in Azure's Video Indexer tool. YouTube started in 2017 by adding captions for three sounds (applause, music, and laughter) to its videos [5].

Current products recognize only a few sounds, so we should look toward the next frontiers of Acoustic Intelligence. The next generation of the technology will aim to understand the meaning of sounds in a given context, as in the Waymo example, and will also be able to generate sounds and soundscapes, for example in Augmented Reality setups. Software will process sound in isolation, as in acoustic monitoring systems for Assisted Living, and also in multimodal setups that combine, for example, speech, text, images, and haptic feedback. On the hardware side, audio will be processed by any device with a microphone, such as headphones, hearing aids, speakers, and acoustic sensors. Applications will include Entertainment, Health, Automotive, Surveillance, and Monitoring. The market for consumer products is expected to grow exponentially and reach USD 4.42 billion by 2025 [6]. Acoustic Intelligence will extend how computers hear beyond speech and will become part of our everyday lives.

References

[1] Lyon, Richard F. “Machine hearing: An emerging field [exploratory DSP].” IEEE Signal Processing Magazine 27.5 (2010): 131-139.

[2] Gillespie, Claire. (2020, June 23). “Apple Watch’s New Handwashing Feature Will Detect and Time How Long You Wash Up”

[3] Waymo. (2017, July 10). “Recognizing the sights and sounds of emergency vehicles”

[4] Cisco. (2020, March 9). “Cisco Annual Internet Report (2018–2023)”

[5] Chaudhuri, Sourish. (2017, March 23). “Adding Sound Effect Information to YouTube Captions”

[6] Grand View Research. (2019, December). “Sound recognition market worth 4.42 billion by 2025”